Search the web with GPT for Sheets

Search the web directly in GPT for Sheets using web search models. These models use fresh web search data, which allows you to generate up-to-date responses about companies, current events, facts, and more, directly in your spreadsheet. You can also fetch content from specific URLs to extract information from web pages.

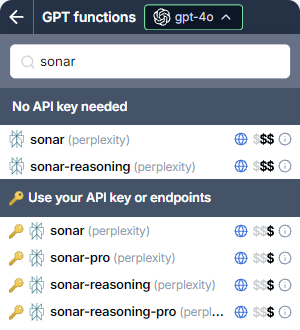

Choose the right web search model

Choose a web search model that best fits your use case.

Web search

Get information that is not in the model's built-in knowledge:

- Example: Finding the current CEO of a company.
- How it works: All web search models can go beyond their built-in knowledge by accessing information from search engines such as Google or Bing. This lets models, whose built-in knowledge is a compressed snapshot of past data, explore topics in greater depth, with more current data, or with a specific focus.

  Web search models do not fetch full page content. Instead, they read search result snippets, like those you see on a Google search results page. The snippets are added to the model's context, so the model can use that information to generate a response.
- Recommended models:
  - Sonar for quick results (cheapest).
  - Sonar Pro, Sonar Reasoning, or GPT-4o Search models for high-quality results.
  - Gemini models with Web search enabled for Google search quality (most expensive).
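As a rough illustration of the snippet-based approach described under How it works, the Python sketch below assembles search result snippets and a question into a single prompt. It is not the actual implementation used by GPT for Sheets or any model provider: the Snippet fields, the prompt wording, and the max_snippets cutoff are assumptions, and the call to the search engine itself is omitted.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    """One search result (hypothetical fields chosen for this sketch)."""
    title: str
    url: str
    text: str


def build_search_context(question: str, snippets: list[Snippet], max_snippets: int = 5) -> str:
    """Place numbered search snippets ahead of the question in one prompt.

    Hypothetical layout for illustration; real providers format their
    search context in their own way.
    """
    lines = ["Answer the question using these search results:", ""]
    # Only the snippet text is included, not the full page behind each URL.
    for number, snippet in enumerate(snippets[:max_snippets], start=1):
        lines.append(f"[{number}] {snippet.title} ({snippet.url})")
        lines.append(snippet.text)
        lines.append("")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


if __name__ == "__main__":
    results = [
        Snippet("Example Corp leadership", "https://example.com/about", "Jane Doe is the CEO of Example Corp."),
    ]
    print(build_search_context("Who is the current CEO of Example Corp?", results))
```

Because only short snippets reach the model, the answer can be no better than what the search result snippets contain.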

Content fetching

Focus on specific URLs and extract content from them:

- Example: Extracting the product name and price from the URL of a product page.
- How it works: Some web search models can access the full content of specific URLs. The full content is added to the model's context, so the model can answer questions about the content or extract information from it.
- Recommended models:

The following table lists all web search models supported by GPT for Sheets.

| Provider | Model | Without API key | With API key |
|---|---|---|---|
| Perplexity | sonar | ✅ | ✅ |
| Perplexity | sonar-pro | ✅ | ✅ |
| Perplexity | sonar-reasoning | ✅ | ✅ |
| Perplexity | sonar-reasoning-pro | ❌ | ✅ |
| Google | gemini-2.0-flash | ❌ | ✅ |
| Google | gemini-2.0-flash-lite | ❌ | ✅ |
| Google | gemini-2.5-flash | ✅ | ✅ |
| Google | gemini-2.5-flash-lite | ❌ | ✅ |
| Google | gemini-2.5-pro | ❌ | ✅ |
| OpenAI | gpt-4o-mini-search-preview | ✅ | ✅ |
| OpenAI | gpt-4o-search-preview | ✅ | ✅ |
| OpenAI | gpt-5-search-api | ✅ | ✅ |

For detailed pricing, see AI providers and models.
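Content fetching, as described under Content fetching above, differs from snippet-based search: the full page content, not just a snippet, goes into the model's context. The Python sketch below illustrates that idea under stated assumptions. It uses only the standard library to download a page, keeps the visible text (dropping tags, scripts, and styles), truncates it to a hypothetical max_chars budget, and places it ahead of the question. The helper names and prompt layout are hypothetical; GPT for Sheets and the model providers do their own fetching.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class _VisibleText(HTMLParser):
    """Collect text nodes, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.parts.append(data.strip())


def page_text(html: str) -> str:
    """Return the visible text of an HTML document as one space-separated string."""
    parser = _VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page with the standard library (needs network access)."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def build_fetch_context(question: str, url: str, html: str, max_chars: int = 20_000) -> str:
    """Put the page's full text (up to a hypothetical max_chars budget) ahead of the question."""
    text = page_text(html)[:max_chars]
    return f"Content of {url}:\n{text}\n\nQuestion: {question}"


if __name__ == "__main__":
    sample = "<html><body><h1>Trail Shoe X</h1><p>Price: $89.00</p></body></html>"
    # With network access, fetch_html("https://example.com/product") could replace the literal.
    print(build_fetch_context("What are the product name and price?", "https://example.com/product", sample))
```

The max_chars cutoff stands in for the fact that fetched content counts toward the model's input, so long pages cost more.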

Use web search models

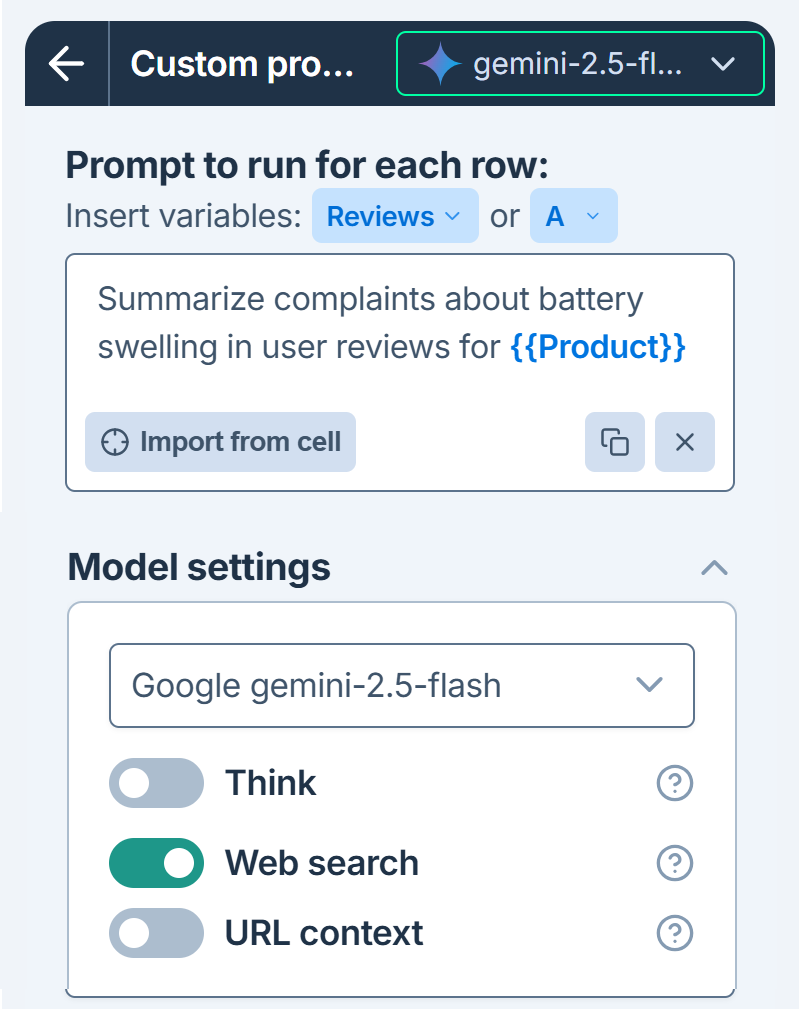

- Bulk tools

- GPT functions

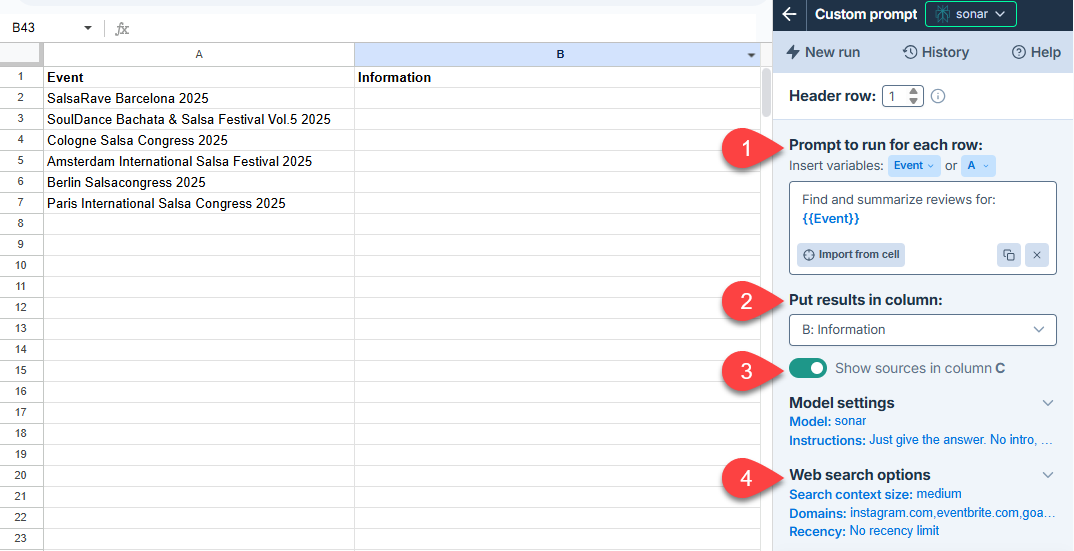

Use bulk tools to perform web searches with any model that has web search capabilities (🌐).

-

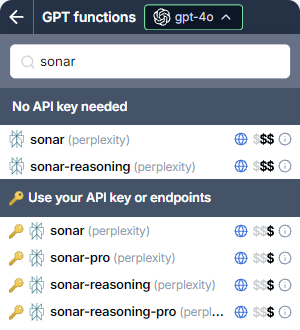

In the sidebar, select Bulk AI tools and click the tool you want to use.

-

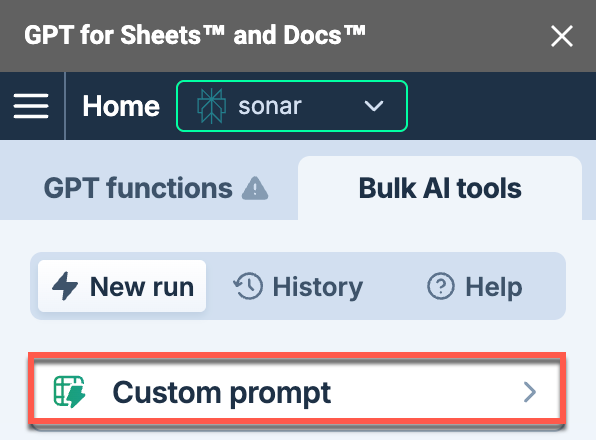

Expand the model switcher and select a web search model (indicated by the 🌐 icon).

-

(Optional) Configure the model-specific settings in the sidebar. For Gemini models, make sure web search is enabled.

-

Set up your bulk tool run. For example, to set up a Custom prompt run:

Field Description Example

Prompt to run for each row

Enter the prompt you want to run for each row.

Find and summarize reviews for: {{Event}}.

Put results in column

Select the column to put the results in. Cells in these columns won't be overwritten with the results if they contain text.

B: Information

Show sources

Enable this option to write source references in the column to the right of the results column. Enabled

-

Click Run rows.

Your search results appear in the output column. If you enabled source references, they appear in the adjacent column.
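To make the Custom prompt settings concrete, the Python sketch below models what a run does with each row, under assumptions. {{Header}} placeholders are filled from the row's values (left as-is when no matching column exists, which is a choice of this sketch), rows whose result cell already contains text are skipped, and sources go in the column to the right of the results column. All function names are hypothetical and do not describe the add-on's internals.

```python
import re

# {{Header}} placeholders, as in the Custom prompt example; spaces inside the braces are tolerated.
PLACEHOLDER = re.compile(r"\{\{\s*([^{}]+?)\s*\}\}")


def fill_prompt(template: str, row: dict[str, str]) -> str:
    """Replace each {{Header}} with the row's value; unknown headers are left untouched."""
    return PLACEHOLDER.sub(lambda m: row.get(m.group(1), m.group(0)), template)


def should_write(existing: str) -> bool:
    """Write only into empty result cells; cells that already contain text are kept."""
    return existing.strip() == ""


def column_to_right(column: str) -> str:
    """Return the column letter to the right, e.g. 'B' -> 'C' and 'Z' -> 'AA'."""
    index = 0
    for letter in column.upper():
        index = index * 26 + (ord(letter) - ord("A") + 1)
    index += 1
    letters = ""
    while index > 0:
        index, remainder = divmod(index - 1, 26)
        letters = chr(ord("A") + remainder) + letters
    return letters


def plan_run(template: str, rows: list[dict[str, str]], results: list[str]) -> list[tuple[int, str]]:
    """Return (row index, filled prompt) for each row whose result cell is still empty."""
    return [
        (i, fill_prompt(template, row))
        for i, row in enumerate(rows)
        if should_write(results[i])
    ]


if __name__ == "__main__":
    rows = [{"Event": "Berlin Salsa Festival 2025"}, {"Event": "Another event"}]
    results = ["", "Already filled"]
    print(plan_run("Find and summarize reviews for: {{Event}}.", rows, results))
    print("Sources column:", column_to_right("B"))
```

With results in column B, as in the example above, the source references land in column C.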

For instructions on how to use the tools, see Bulk AI tools.

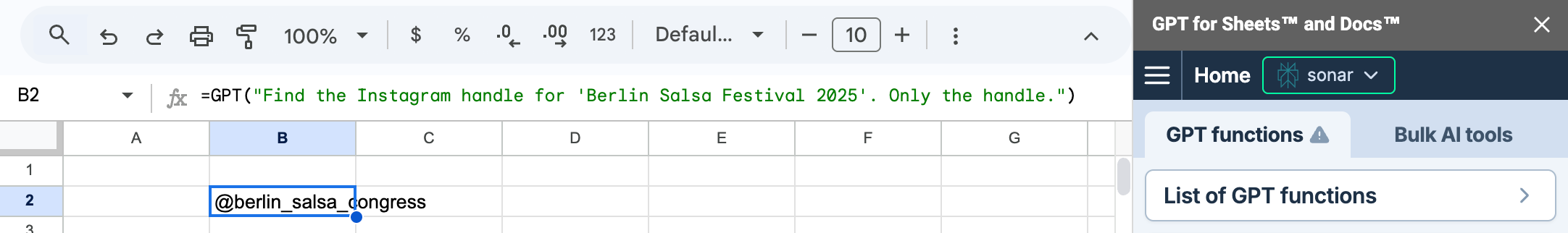

Use GPT functions to perform web searches with any model that has web search capabilities (🌐).

Using the model switcher

- In the sidebar, select GPT functions.
- Expand the model switcher and select a web search model (indicated by the 🌐 icon).
- (Optional) Configure the model-specific settings in the sidebar. For Gemini models, make sure web search is enabled.
- In the spreadsheet, select a cell and write your formula:

  =GPT("Find the Instagram handle for 'Berlin Salsa Festival 2025'.")

The formula uses the web search model selected in the model switcher.

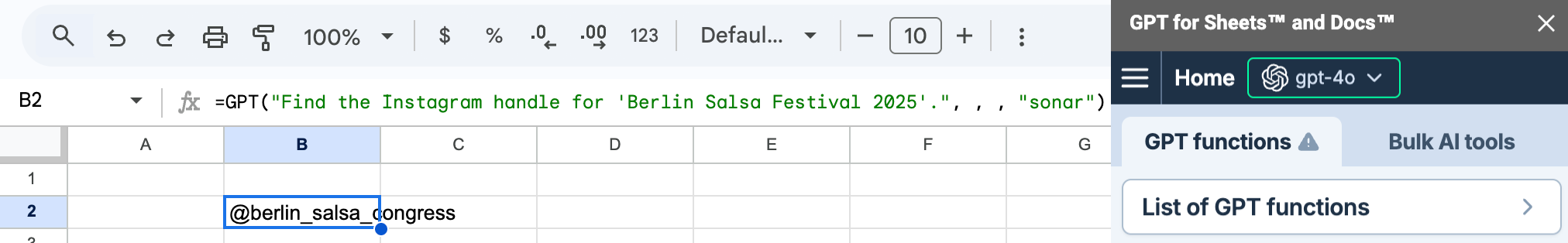

Specifying the model in the formula

- In the sidebar, select GPT functions.
- (Optional) Configure the model-specific settings in the sidebar. For Gemini models, make sure web search is enabled.
- In the spreadsheet, select a cell and write your formula, specifying a web search model directly in the formula, for example, the Perplexity Sonar model:

  =GPT("Find the Instagram handle for 'Berlin Salsa Festival 2025'.", , , "sonar")

The formula uses the specified web search model regardless of which model is currently selected in the model switcher.
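If you generate many formulas from a script, a small helper like the hypothetical Python one below builds =GPT formulas in the shape used in the examples above: the prompt as the first argument and, optionally, a model name as the fourth argument, with the second and third left empty. It doubles any double quotes inside the prompt, which is how Google Sheets escapes quotes inside a string literal. Omitting the model produces a formula that falls back to the model switcher. The helper names are illustrative, not part of GPT for Sheets.

```python
from typing import Optional


def sheets_string(text: str) -> str:
    """Quote text as a Google Sheets string literal by doubling embedded double quotes."""
    return '"' + text.replace('"', '""') + '"'


def gpt_formula(prompt: str, model: Optional[str] = None) -> str:
    """Build a =GPT(...) formula in the shape of the documented examples."""
    if model is None:
        # No model argument: the cell uses whatever model the model switcher selects.
        return f"=GPT({sheets_string(prompt)})"
    # Model as the fourth argument; the second and third arguments are left empty.
    return f"=GPT({sheets_string(prompt)}, , , {sheets_string(model)})"


if __name__ == "__main__":
    print(gpt_formula("Find the Instagram handle for 'Berlin Salsa Festival 2025'.", "sonar"))
    print(gpt_formula('Summarize the "About" page of example.com.'))
```

The first call reproduces the Sonar example formula exactly; single quotes inside the prompt need no escaping.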

For instructions on how to write formulas with GPT functions, see GPT functions.

Model-specific settings

Use the model-specific settings to configure the web search model for your use case.

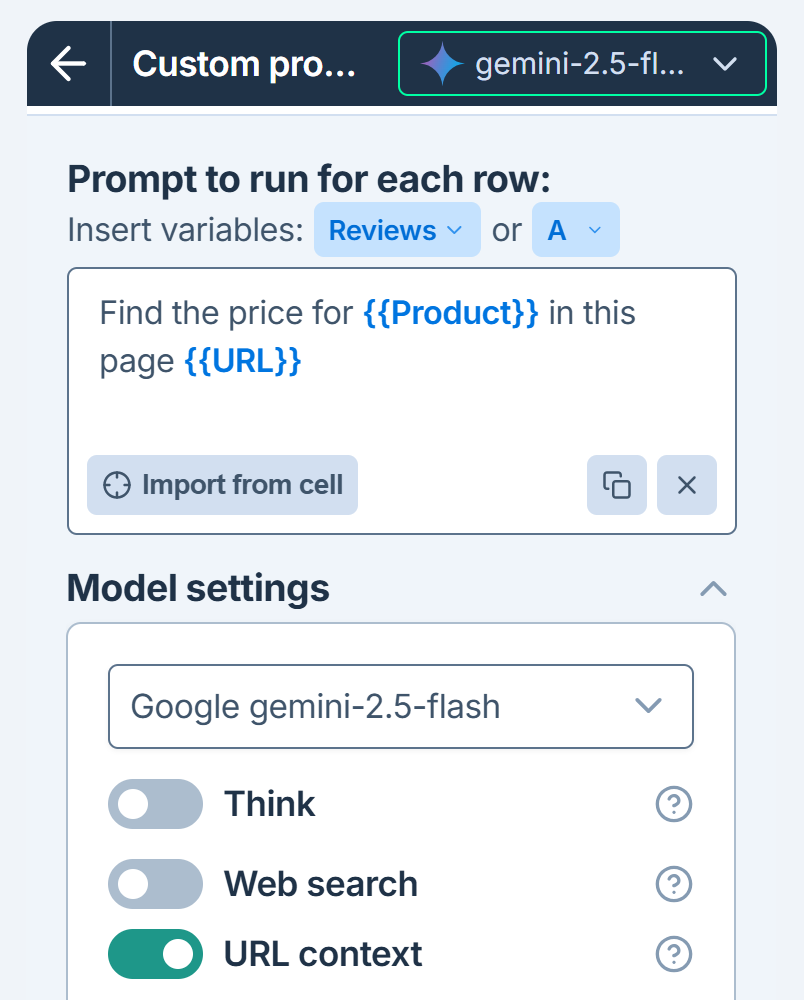

- Google Gemini

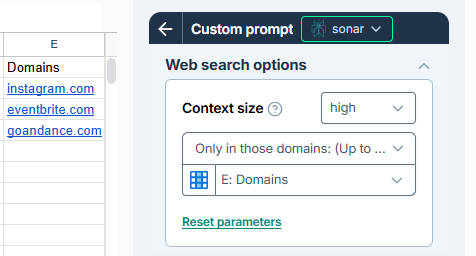

- Perplexity Sonar

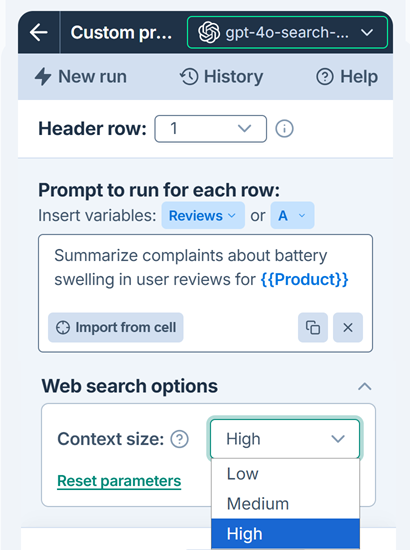

- OpenAI GPT-4o Search

| Setting | Description | Example |

|---|---|---|

Web search | Let Gemini decide when a Google search can improve the answer. This allows Gemini to provide more accurate answers and cite verifiable sources beyond its built-in knowledge. Enabling Web search incurs an additional search cost. |  |

URL context (Gemini 2.5 only) | Provide up to 20 URLs (pages, images, PDFs) to focus your search. No search cost is incurred, but the content retrieved from URLs counts as input tokens. |  |

| Setting | Description | Example |

|---|---|---|

Context size | Choose how much content to retrieve from each source. Larger sizes produce richer and more detailed responses at a higher cost. |  |

Domains (Bulk tools only) | Specify up to 20 domains to generate responses based only on search results from these domains. Use simple domain names (e.g., |

| Setting | Description | Example |

|---|---|---|

Context size | Choose how much content to retrieve from each source. Larger sizes produce richer and more detailed responses at a higher cost. |  |
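Before pasting a long list into the URL context or Domains setting, you might check it against the 20-item limits stated above. The Python sketch below does that count and also flags URL context entries that are not absolute http(s) URLs; that URL check is an assumption of this sketch, not a documented rule, and the helper names are hypothetical. It deliberately does not check the form of domain names beyond skipping blank entries.

```python
from urllib.parse import urlparse

MAX_URL_CONTEXT = 20  # Gemini URL context: up to 20 URLs (from the settings table)
MAX_DOMAINS = 20      # Perplexity Domains setting: up to 20 domains (from the settings table)


def check_url_context(urls: list[str]) -> list[str]:
    """Return a list of problems; an empty list means the URLs pass these checks."""
    problems = []
    if len(urls) > MAX_URL_CONTEXT:
        problems.append(f"{len(urls)} URLs given; the limit is {MAX_URL_CONTEXT}.")
    for url in urls:
        parsed = urlparse(url.strip())
        # Assumption of this sketch: each entry should be an absolute http(s) URL.
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append(f"Not an absolute http(s) URL: {url}")
    return problems


def check_domains(domains: list[str]) -> list[str]:
    """Count non-empty domain entries against the 20-domain limit."""
    entries = [d.strip() for d in domains if d.strip()]
    if len(entries) > MAX_DOMAINS:
        return [f"{len(entries)} domains given; the limit is {MAX_DOMAINS}."]
    return []


if __name__ == "__main__":
    print(check_url_context(["https://example.com/pricing", "example.com/docs"]))
    print(check_domains(["example.com", "example.org"]))
```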