Reduce AI response repetition with OpenAI models in GPT for Docs

Set presence and frequency penalties in GPT for Docs to reduce the tendency of OpenAI models towards repetition.

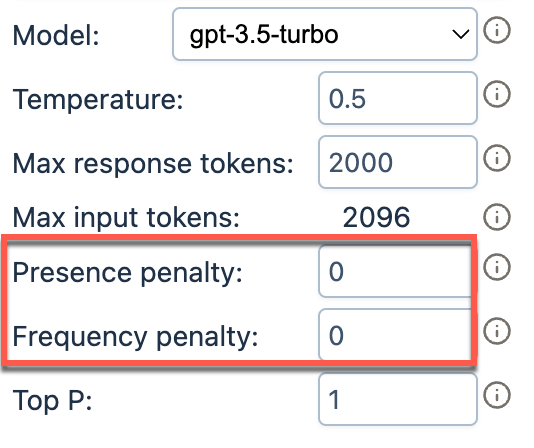

| Parameter | Definition |
|---|---|
| Presence penalty | Penalizes tokens based on whether they have already appeared in the text so far. Higher values encourage the model to use tokens that have not appeared yet. |
| Frequency penalty | Penalizes tokens based on how often they have already appeared in the text so far. Higher values discourage the model from repeating the same tokens too frequently. |
| Token | Tokens can be thought of as pieces of words. During processing, the language model breaks down both the input (prompt) and the output (completion) into smaller units called tokens. A token generally corresponds to about 4 characters of common English text, so 100 tokens are roughly 75 words. Learn more with our token guide. |
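To make the difference between the two penalties concrete, here is a minimal Python sketch of how OpenAI's API documentation describes their effect on the model's logits (the scores it assigns to each candidate next token). Each token that has already appeared loses its count times the frequency penalty, plus the presence penalty once. The helper name `apply_penalties`, the whole-word tokens, and the logit values are hypothetical and chosen for readability. This illustrates the mechanism only; it is not code that GPT for Docs runs.

```python
from collections import Counter


def apply_penalties(logits, generated_tokens, presence_penalty, frequency_penalty):
    """Return a copy of `logits` with presence and frequency penalties applied.

    Hypothetical helper following the formula described in OpenAI's API docs:
        logit[t] -= count[t] * frequency_penalty
                    + (1 if count[t] > 0 else 0) * presence_penalty
    """
    counts = Counter(generated_tokens)  # how often each token has already appeared
    adjusted = dict(logits)  # copy so the caller's scores are not modified
    for token, count in counts.items():
        if token in adjusted:
            # Every token in `counts` has appeared at least once, so the presence
            # penalty applies as a flat deduction; the frequency penalty grows
            # with each repetition.
            adjusted[token] -= count * frequency_penalty + presence_penalty
    return adjusted


# Hypothetical scores for three candidate next tokens.
logits = {"the": 2.0, "cat": 1.5, "dog": 1.5}
generated = ["the", "cat", "the", "the"]  # "the" appeared 3 times, "cat" once

adjusted = apply_penalties(logits, generated, presence_penalty=0.5, frequency_penalty=0.25)
print(adjusted)  # {'the': 0.75, 'cat': 0.75, 'dog': 1.5}
```

With both penalties at 0 the scores are unchanged. Raising them shifts the model toward tokens such as "dog" that have not appeared yet, and the frequency penalty hits the thrice-repeated "the" hardest.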

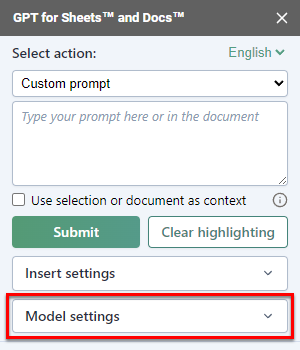

In the GPT for Docs sidebar, click Model settings.

Set Presence penalty and Frequency penalty to values between 0 and 2.

You've set the Presence penalty and Frequency penalty. GPT for Docs now uses these values to generate all responses.

What's next

Configure other settings to customize how the language model operates.