Set up LM Studio on Windows

This guide walks you through the main steps of setting up LM Studio for use in GPT for Work on Windows.

This guide assumes that you use GPT for Work on the same machine that hosts LM Studio.

Prerequisites

Windows user account with administrator privileges

Installed software:

curl (included with Windows 10 version 1803 and later, and with Windows 11)

To set up LM Studio on Windows:

Install LM Studio and download a model

Download and run the Windows installer. Follow the on-screen instructions to complete the installation.

The installer sets LM Studio to start automatically as a background service on system boot.

Run LM Studio.

On the welcome screen, click Skip onboarding.

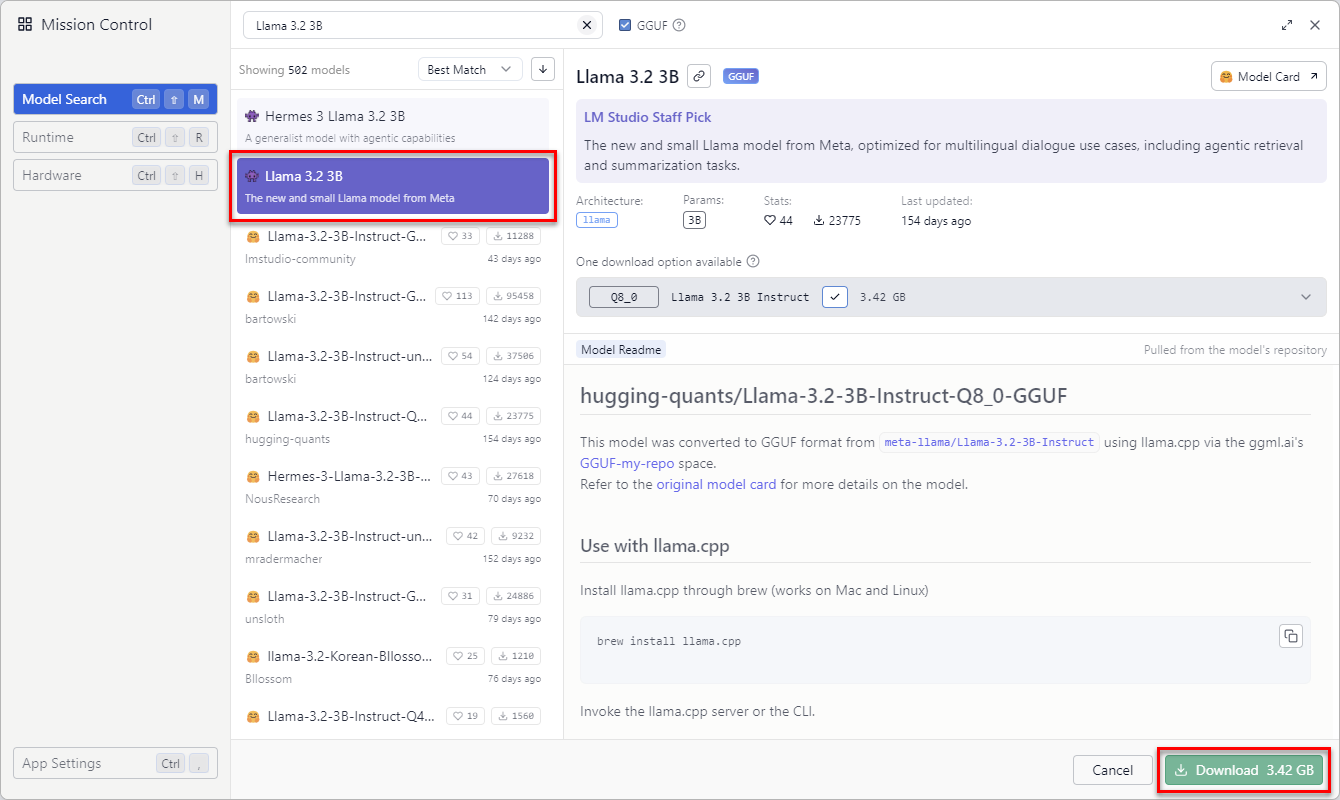

In LM Studio, in the sidebar, select Discover.

Find and select the model you want to use, and click Download. For example, to get started with a small model, select Llama 3.2 3B.

After the download completes, the model is available for prompting in LM Studio.

You have installed LM Studio and downloaded your first model. For more information about working with models, see the LM Studio documentation.

Start and configure the LM Studio server

In LM Studio, in the sidebar, select Developer.

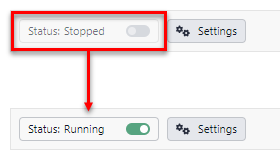

Click the Status toggle to change the status from Stopped to Running. You have started the LM Studio server.

Click Settings.

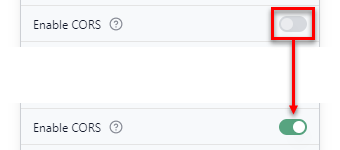

Click the Enable CORS toggle to enable the setting.

Note: By default, the LM Studio server only accepts same-origin requests. Because GPT for Work always has a different origin from the LM Studio server, you must enable cross-origin resource sharing (CORS) for the server.

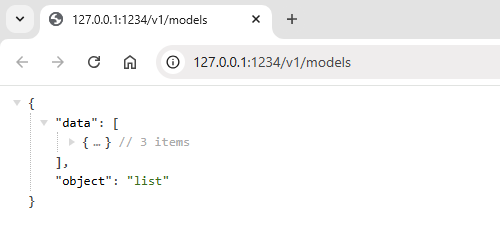
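If you want to confirm the CORS setting outside the LM Studio interface, the following Python sketch sends a request carrying an Origin header, as a browser would for a cross-origin call. It then checks whether the response's Access-Control-Allow-Origin header permits that origin. It assumes the server follows the standard CORS protocol and answers with either * or the requesting origin. The function names and the example origin are hypothetical and are not part of LM Studio or GPT for Work.

```python
import urllib.request


def cors_allows(headers, origin):
    """Return True if response headers permit cross-origin requests from origin."""
    # HTTP header names are case-insensitive, so normalize them before the lookup.
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    allowed = normalized.get("access-control-allow-origin")
    # A wildcard or an exact echo of the requesting origin both grant access.
    return allowed == "*" or allowed == origin


def check_cors(base_url, origin):
    """Send a GET with an Origin header and report whether CORS allows it.

    Hypothetical helper: raises urllib.error.URLError if the server is
    unreachable, or urllib.error.HTTPError if it rejects the request.
    """
    request = urllib.request.Request(
        f"{base_url}/v1/models", headers={"Origin": origin}
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return cors_allows(dict(response.headers.items()), origin)
```

For example, with the server running and Enable CORS switched on, check_cors("http://127.0.0.1:1234", "https://example.com") would be expected to return True. The origin there is a placeholder, not GPT for Work's actual origin.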

To verify that the /v1/models endpoint of the LM Studio server works, open http://127.0.0.1:1234/v1/models. GPT for Work uses this endpoint to fetch a list of the models installed on the server. If the endpoint works, the server returns a JSON object with a data property listing all currently installed models.

You have started and configured the LM Studio server.
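The /v1/models verification step above can also be scripted. The Python sketch below fetches the endpoint and returns the identifier of each entry in the data list. It assumes the OpenAI-compatible response shape, in which each entry carries an id field. The helper names list_models and model_ids are hypothetical, and the default base URL is the one used throughout this guide.

```python
import json
import urllib.request


def model_ids(payload):
    """Extract model identifiers from a /v1/models JSON response body."""
    body = json.loads(payload)
    # Assumes OpenAI-style entries: {"data": [{"id": "..."}, ...]}.
    return [entry["id"] for entry in body.get("data", []) if "id" in entry]


def list_models(base_url="http://127.0.0.1:1234"):
    """Fetch the models endpoint and return the installed model IDs."""
    with urllib.request.urlopen(f"{base_url}/v1/models", timeout=5) as response:
        return model_ids(response.read().decode("utf-8"))
```

Splitting the parsing from the network call keeps model_ids testable without a running server. If list_models() raises an exception from urlopen, the server is most likely not running or not listening at that address.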

You have completed the setup required to access LM Studio from GPT for Work on the same machine. You can now set http://127.0.0.1:1234 as the local server URL in GPT for Work.
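Before you enter the URL in GPT for Work, you can quickly confirm that something is accepting connections at that address. The Python sketch below only tests whether a TCP connection to 127.0.0.1:1234 succeeds. It does not confirm that the listener is LM Studio, so it complements rather than replaces the /v1/models check. The function name is hypothetical.

```python
import socket


def is_listening(host="127.0.0.1", port=1234, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection raises OSError (for example ConnectionRefusedError
        # or a timeout) when nothing accepts the connection.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Called with its defaults, is_listening() checks the address this guide uses. A False result suggests the server is stopped or listening on a different port.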