Set up LM Studio on macOS

This guide walks you through the main steps of setting up LM Studio for use in GPT for Work on macOS.

This guide assumes that you use GPT for Work on the same machine that hosts LM Studio.

Prerequisites

- Mac user account with administrator (sudo) privileges
- Installed software:

To set up LM Studio on macOS:

- Install LM Studio and download a model.
- Start and configure the LM Studio server.
- (Optional) Enable HTTPS for the LM Studio server.

Install LM Studio and download a model

-

Download and run the macOS installer. Follow the on-screen instructions to complete the installation.

-

Run LM Studio.

-

On the welcome screen, click Skip.

-

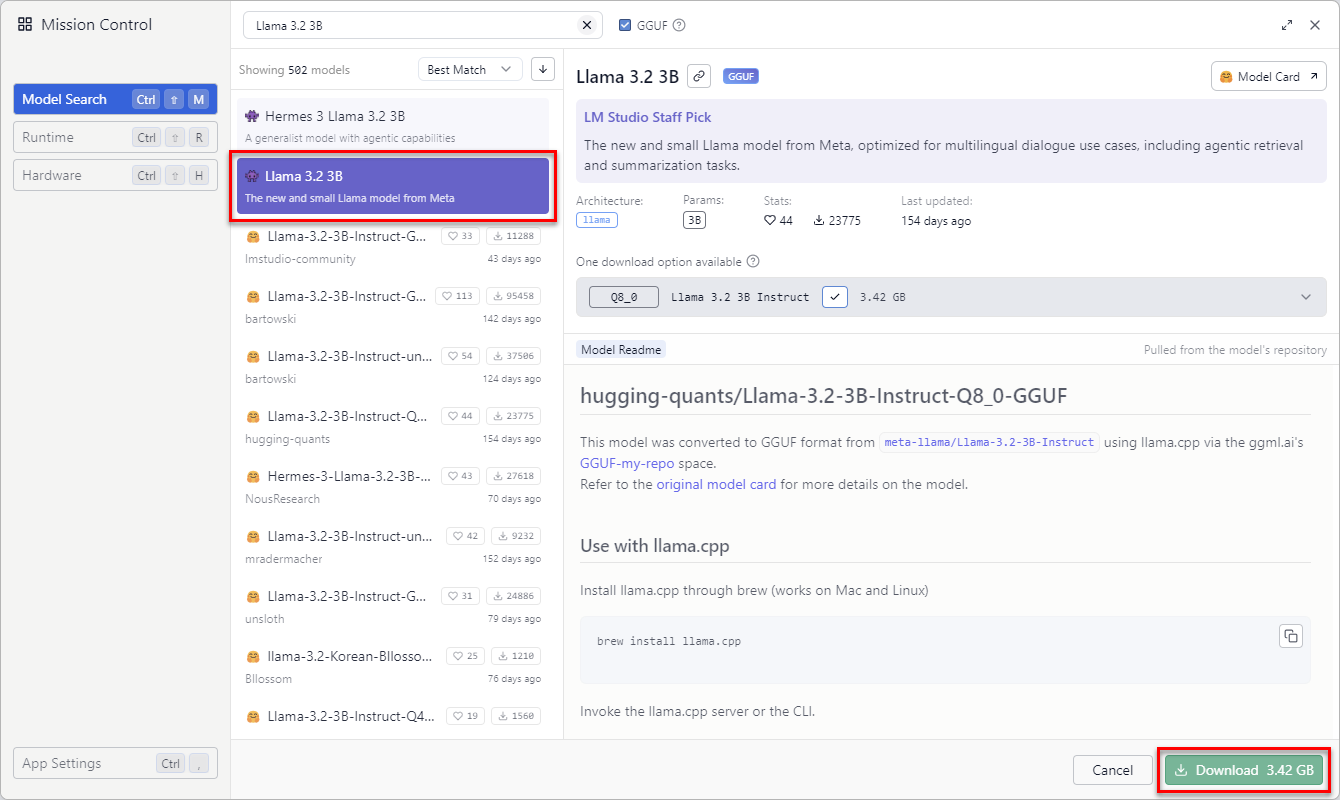

In LM Studio, in the sidebar, select Discover.

-

Find and select the model you want to use, and click Download. For example, to get started with a small model, select Llama 3.2 3B.

After the download completes, the model is available for prompting in LM Studio.

You have installed LM Studio and downloaded your first model. For more information about working with models, see the LM Studio documentation.

Start and configure the LM Studio server

-

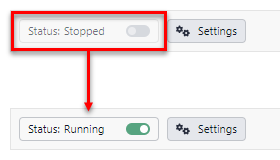

In LM Studio, in the sidebar, select Developer.

-

Click the Status toggle to change the status from Stopped to Running. You have started the LM Studio server.

-

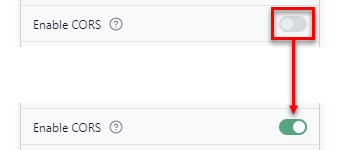

Click Settings.

-

Click the Enable CORS toggle to enable the setting.

  Note: By default, the LM Studio server only accepts same-origin requests. Since GPT for Work always has a different origin from the LM Studio server, you must enable cross-origin resource sharing (CORS) for the server.

-

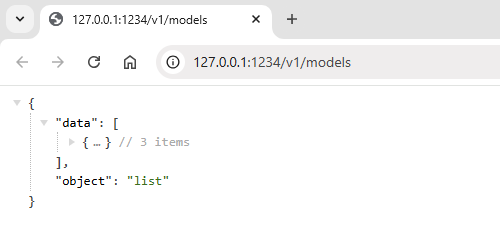

To verify that the

/v1/modelsendpoint of the LM Studio server works, open http://127.0.0.1:1234/v1/models.GPT for Work uses the endpoint to fetch a list of models installed on the server. If the endpoint works, the server returns a JSON object with a

dataproperty listing all currently installed models:
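The same check can be run from a terminal. The following is a minimal sketch rather than an official tool: the function names has_data_property and check_models_endpoint are made up for this example, and the grep test only looks for a data key in the response text instead of parsing the JSON properly. It assumes curl is available, as it is on a standard macOS installation.

```shell
# Succeeds if the JSON text on standard input contains a "data" key.
# This is a crude text match, not a real JSON parser.
has_data_property() {
  grep '"data"[[:space:]]*:' >/dev/null
}

# Fetches /v1/models from the given base URL and reports the result.
# $1 = base URL of the server (defaults to the local LM Studio server).
check_models_endpoint() {
  local base_url="${1:-http://127.0.0.1:1234}"
  if curl --silent --fail --max-time 5 "$base_url/v1/models" | has_data_property; then
    echo "OK: $base_url/v1/models returned a data property"
  else
    echo "FAILED: no usable response from $base_url/v1/models" >&2
    return 1
  fi
}
```

Running check_models_endpoint with no argument checks http://127.0.0.1:1234. An empty data list still counts as a working endpoint, because the check only looks for the property itself.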

You have started and configured the LM Studio server.

You have completed the minimum setup required to access LM Studio from GPT for Work on the same machine. You can now set http://127.0.0.1:1234 as the endpoint URL in GPT for Work, provided the add-in is not running on Safari or on Microsoft Excel or Word.

If you use GPT for Work on Safari, or on Microsoft Excel or Word, enable HTTPS for the LM Studio server.

Enable HTTPS for the LM Studio server

The LM Studio server uses HTTP to serve models, while GPT for Work runs on HTTPS. By default, therefore, any request from GPT for Work to the server is a mixed-content request (HTTP vs. HTTPS). Modern web browsers do not allow mixed content, as a rule. The only exceptions are mixed-content requests to http://127.0.0.1 and http://localhost, which most browsers treat as safe and therefore allow. Safari, however, blocks mixed-content requests even on the current machine.
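The rule can be summarized as a small decision function. This is only a sketch of the behavior described above, not how any browser implements it: the function name request_allowed and the browser labels (such as safari and chrome) are hypothetical, and "most browsers" is simplified to "every browser except Safari".

```shell
# Sketch of the mixed-content rule for a request sent from an HTTPS page.
# $1 = browser label (hypothetical, for example "safari" or "chrome")
# $2 = target URL of the request
# Exit status 0 means the request is allowed, 1 means it is blocked.
request_allowed() {
  local browser="$1"
  local url="$2"

  case "$url" in
    https://*) return 0 ;;  # HTTPS page to HTTPS target is not mixed content
  esac

  # From here on the target is plain HTTP, so the request is mixed content.
  if [ "$browser" = "safari" ]; then
    return 1                # Safari blocks it even for the current machine
  fi

  case "$url" in
    http://127.0.0.1|http://127.0.0.1[:/]*|http://localhost|http://localhost[:/]*)
      return 0 ;;           # loopback addresses are treated as safe
  esac
  return 1                  # any other HTTP target is blocked
}
```

For example, request_allowed chrome http://127.0.0.1:1234 succeeds, while request_allowed safari http://127.0.0.1:1234 fails, which is why Safari-based hosts need the HTTPS setup.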

To avoid mixed content, you must make the LM Studio server accessible over HTTPS if you use GPT for Work from:

- Excel for the web or Word for the web on Safari
- Excel for Mac (uses Safari)
- Word for Mac (uses Safari)

You make the LM Studio server accessible over HTTPS by setting up a reverse proxy that hides the server behind an HTTPS interface. This guide uses nginx to set up the reverse proxy.

You can also use Cloudflare Tunnel, ngrok, or a similar cloud-based tunneling service to set up HTTPS with minimal configuration. Note that with a cloud-based service your traffic will be routed through an external service over the internet.

To set up the reverse proxy:

- Install nginx:

      brew install nginx
- Create a self-signed SSL certificate for the LM Studio server:
  - Change to the nginx configuration directory (the path depends on your Mac's processor):

        # Mac with an Apple silicon processor
        cd /opt/homebrew/etc/nginx

        # Mac with an Intel processor
        cd /usr/local/etc/nginx
  - Generate the certificate:

        # Create a self-signed certificate, valid for 365 days, for localhost and 127.0.0.1
        openssl req \
          -x509 \
          -newkey rsa:2048 \
          -nodes \
          -sha256 \
          -days 365 \
          -keyout lm-studio.key \
          -out lm-studio.crt \
          -subj '/CN=127.0.0.1' \
          -extensions extensions \
          -config <(printf "[dn]\nCN=127.0.0.1\n[req]\ndistinguished_name=dn\n[extensions]\nsubjectAltName=DNS:localhost,IP:127.0.0.1\nkeyUsage=digitalSignature\nextendedKeyUsage=serverAuth")

    The command generates two files in the current directory:
    - lm-studio.crt: Public self-signed certificate
    - lm-studio.key: Private key used to sign the certificate
  - Add the certificate to trusted root certificates:

        sudo security add-trusted-cert -d -r trustRoot -k /Library/Keychains/System.keychain ./lm-studio.crt

- Create a site configuration for the LM Studio server:
  - Open the nginx.conf file in your preferred text editor. The file is in the nginx configuration directory:

        # Mac with an Apple silicon processor
        /opt/homebrew/etc/nginx/nginx.conf

        # Mac with an Intel processor
        /usr/local/etc/nginx/nginx.conf
  - Replace the content of the file with the following configuration:

        events {}

        http {
          server {
            listen 127.0.0.1:1235 ssl;
            server_name 127.0.0.1;
            http2 on;

            ssl_certificate lm-studio.crt;
            ssl_certificate_key lm-studio.key;

            location / {
              proxy_pass http://127.0.0.1:1234;

              # Keep connection to backend alive.
              proxy_http_version 1.1;
              proxy_set_header Connection '';
            }
          }
        }

    The configuration uses port 1235 for HTTPS. Requests to https://127.0.0.1:1235 are forwarded to the LM Studio server running at http://127.0.0.1:1234.
  - Save and close the file.
  - Test the nginx configuration:

        sudo nginx -t

    If the configuration is valid, nginx reports that the syntax is ok and that the test is successful.
  - Start nginx:

        sudo nginx

- Verify that the /v1/models endpoint of the LM Studio server works over the HTTPS connection:

      curl -k https://127.0.0.1:1235/v1/models

  GPT for Work uses the endpoint to fetch a list of models installed on the server. If the endpoint works, the server returns a JSON object with a data property listing all currently installed models.
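The nginx configuration directory used in the steps above can also be derived from the processor architecture instead of being typed by hand. The sketch below assumes a default Homebrew installation and a terminal that runs natively (not under Rosetta). The helper name nginx_conf_dir is hypothetical, and the two paths are the ones listed in this guide.

```shell
# Prints the Homebrew nginx configuration directory for a CPU architecture.
# $1 = architecture as reported by `uname -m` (defaults to this machine's).
nginx_conf_dir() {
  local arch="${1:-$(uname -m)}"
  case "$arch" in
    arm64)  echo "/opt/homebrew/etc/nginx" ;;  # Mac with an Apple silicon processor
    x86_64) echo "/usr/local/etc/nginx" ;;     # Mac with an Intel processor
    *)
      echo "unsupported architecture: $arch" >&2
      return 1
      ;;
  esac
}
```

With this helper defined, cd "$(nginx_conf_dir)" changes to the matching directory on either kind of Mac.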

You have enabled HTTPS for the LM Studio server.

You have completed the setup required to access LM Studio from GPT for Work on the same machine. You can now set https://127.0.0.1:1235 as the endpoint URL in GPT for Work, including when the add-in is running on Safari or on Microsoft Excel or Word.