How your balance is consumed

Your balance in GPT for Excel, GPT for Word, and GPT for Sheets decreases as you consume tokens (except for the GPT_WEB function, which is priced per 1000 executions).

Token consumption includes:

- Input = Text or images sent to the model providers, including your custom instructions and the prompt templates we use under the hood (which are very small)

- Output = Results returned by the model providers

The cost of input and output tokens is listed on our pricing page. Use the cost estimator to estimate what your use case will cost.
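As a rough sketch of how the two pricing models work, the Python below estimates a cost from token counts and, separately, from GPT_WEB executions. The prices are hypothetical placeholders, not our actual rates, and pricing tokens per 1,000,000 is an assumption made for illustration; take real values from the pricing page or the cost estimator.

```python
# Hypothetical prices for illustration only; real rates are on the pricing page.
INPUT_PRICE_PER_1M = 2.50    # assumed cost per 1,000,000 input tokens
OUTPUT_PRICE_PER_1M = 10.00  # assumed cost per 1,000,000 output tokens
GPT_WEB_PRICE_PER_1K = 5.00  # assumed cost per 1,000 GPT_WEB executions


def token_cost(input_tokens: int, output_tokens: int,
               input_price_per_1m: float = INPUT_PRICE_PER_1M,
               output_price_per_1m: float = OUTPUT_PRICE_PER_1M) -> float:
    """Cost of token-priced usage: input and output tokens billed at their own rates."""
    return (input_tokens * input_price_per_1m
            + output_tokens * output_price_per_1m) / 1_000_000


def gpt_web_cost(executions: int, price_per_1k: float = GPT_WEB_PRICE_PER_1K) -> float:
    """GPT_WEB is priced per 1,000 executions rather than per token."""
    return executions * price_per_1k / 1_000


print(token_cost(126, 146))
print(gpt_web_cost(200))
```

With these placeholder prices, `token_cost(126, 146)` (the input and output totals of the translation example below) returns 0.001775, and `gpt_web_cost(200)` returns 1.0.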

Example of token consumption

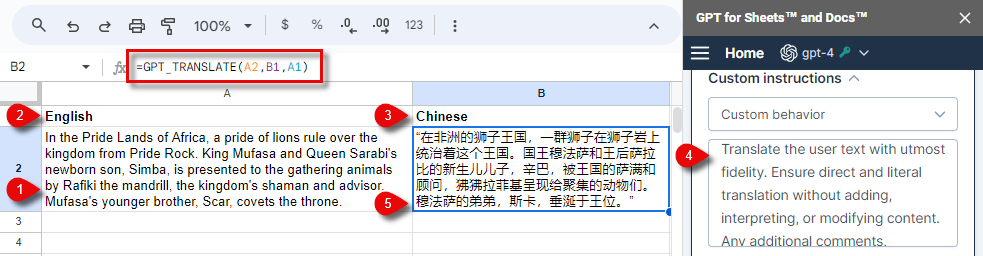

Consider a GPT formula that translates some source text (1) from English (2) to Chinese (3). We provide custom instructions in the GPT for Sheets sidebar (4). The output (5) is the translated text.

Here is how the various elements from the formula and sidebar break down into input tokens:

| Input | Value | # tokens |
|---|---|---|
| Text | In the Pride Lands of Africa, a pride of lions rule over the kingdom from Pride Rock. King Mufasa and Queen Sarabi's newborn son, Simba, is presented to the gathering animals by Rafiki the mandrill, the kingdom's shaman and advisor. Mufasa's younger brother, Scar, covets the throne. | 70 tokens |
| Source language | English | 1 token |
| Target language | Chinese | 1 token |
| Custom instructions | Translate the user text with utmost fidelity. Ensure direct and literal translation without adding, interpreting, or modifying content. Any additional comments, opinions, or deviations are explicitly disallowed. | 35 tokens |
| Prompt template | Prompt templates are sent along with your own input to describe the task to be performed by the AI and the expected output format. | 19 tokens |
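Adding up the rows gives the input side of the bill. A minimal Python tally, using the counts from the table:

```python
# Input token counts for the translation example, one entry per table row.
input_tokens = {
    "Text": 70,
    "Source language": 1,
    "Target language": 1,
    "Custom instructions": 35,
    "Prompt template": 19,
}

# Every row is sent to the model provider, so every row is billed as input.
total_input = sum(input_tokens.values())
print(f"Total input tokens: {total_input}")  # Total input tokens: 126
```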

The number of output tokens depends only on the translated text, which is displayed as the result of your formula:

| Output | Value | # tokens |
|---|---|---|
| Text | 在非洲的狮子王国中,一群狮子统治着自豪岩上的王国。国王姆法萨和王后萨拉比的新生儿儿子辛巴由国王国的神秘顾问和萨满——拉菲基(Rafiki)猴子猿(mandrill)呈现给了聚集在一起的动物们。姆法萨的弟弟斯卡恩觊觎王位。 | 146 tokens |

Unlike other functions, the output of the GPT_MATCH function does not count toward token consumption.
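The counting rule, including the GPT_MATCH exception, can be sketched as a small Python function. It assumes GPT_MATCH is the only token-priced function whose output is excluded, and it leaves GPT_WEB out because that function is priced per execution. The function name is illustrative, and the figures reuse the translation example: 126 input tokens (70 + 1 + 1 + 35 + 19) and 146 output tokens.

```python
def billable_tokens(function_name: str, input_tokens: int, output_tokens: int) -> int:
    """Tokens counted against the balance for one execution (illustrative sketch).

    Output tokens are ignored for GPT_MATCH; every other token-priced
    function is assumed to be billed for both input and output.
    """
    if function_name.upper() == "GPT_MATCH":
        return input_tokens
    return input_tokens + output_tokens


print(billable_tokens("GPT", 126, 146))        # 272
print(billable_tokens("GPT_MATCH", 126, 146))  # 126
```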

Token count and languages

Token counts vary by language because the way text is split into tokens differs from one language to another.

On average (figures from this study):

- English: 1 word ≈ 1.3 tokens

- French: 1 word ≈ 2 tokens

- German: 1 word ≈ 2.1 tokens

- Spanish: 1 word ≈ 2.1 tokens

- Chinese: 1 word ≈ 2.5 tokens

- Russian: 1 word ≈ 3.3 tokens

- Vietnamese: 1 word ≈ 3.3 tokens

- Arabic: 1 word ≈ 4 tokens

- Hindi: 1 word ≈ 6.4 tokens

Refer to the study for other languages.

These figures are presented for estimation purposes only and are not guaranteed.
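To turn these averages into a quick estimate, multiply a word count by the language's ratio. The Python sketch below stores the ratios as tokens per 10 words so the arithmetic stays exact, then rounds up to a whole token. The function name is illustrative, and the result is only as reliable as the averages themselves.

```python
# Average tokens per 10 words (the per-word figures above multiplied by 10).
# Integers avoid floating-point rounding surprises when rounding up.
TOKENS_PER_10_WORDS = {
    "English": 13, "French": 20, "German": 21, "Spanish": 21, "Chinese": 25,
    "Russian": 33, "Vietnamese": 33, "Arabic": 40, "Hindi": 64,
}


def estimate_tokens(word_count: int, language: str) -> int:
    """Rough token estimate for a text, rounded up to a whole token."""
    per_10 = TOKENS_PER_10_WORDS[language]
    return -(-word_count * per_10 // 10)  # integer ceiling division


for lang in ("English", "Hindi"):
    print(lang, estimate_tokens(100, lang))  # English 130, then Hindi 640
```

For the 47-word English passage in the translation example, `estimate_tokens(47, "English")` returns 62, while the example counts 70 tokens, a reminder that these averages only give a ballpark figure.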

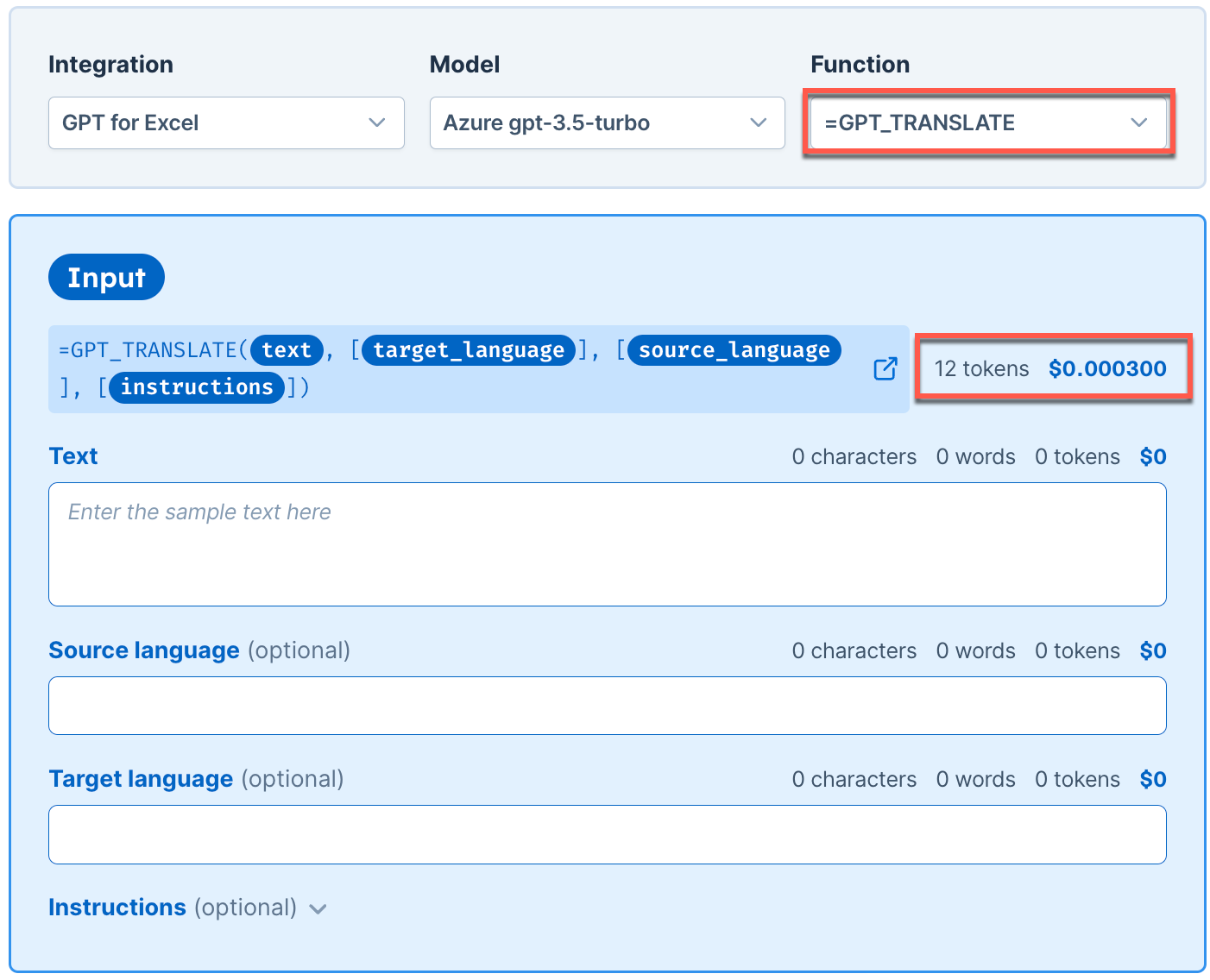

Prompt template tokens

A prompt template is like a fill-in-the-blank guide that GPT for Work extensions use in the background to shape AI responses. It provides a structured format with placeholders for your input. Once the placeholders are filled in, the completed template is sent to the model provider, telling the AI how to use and respond to your input.
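The fill-in-the-blank idea can be illustrated with Python's `string.Template`. The template wording and placeholder names below are hypothetical and are not the templates GPT for Work actually uses; the point is only that your input is substituted into fixed text, and both the fixed text and your input are sent as input tokens.

```python
from string import Template

# Hypothetical template for illustration; not an actual GPT for Work template.
TRANSLATE_TEMPLATE = Template(
    "Translate the following text from $source_language to $target_language. "
    "Return only the translation.\n\n$text"
)


def build_prompt(text: str, source_language: str, target_language: str) -> str:
    """Fill the template's placeholders with the user's input."""
    return TRANSLATE_TEMPLATE.substitute(
        text=text,
        source_language=source_language,
        target_language=target_language,
    )


prompt = build_prompt("Hello, world.", "English", "Chinese")
print(prompt)
```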

For GPT for Docs, prompt templates use approximately 10 tokens.

For GPT for Word, prompt templates use approximately 60 tokens.

For GPT for Sheets and GPT for Excel functions, prompt templates vary according to the function executed.

To find the token count of each function's prompt template, go to the cost estimator and select a function. The number of input tokens shown while the input fields are empty is the token count of the prompt template.
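Because template tokens come on top of your own input, they add up when a function runs many times. The sketch below assumes one template is sent per execution (for example, once per cell a formula is filled into). The 10- and 60-token figures come from this page; 19 is the template size from the translation example, used here because Sheets and Excel templates vary by function. The function name is illustrative.

```python
# Approximate template sizes from this page (Sheets/Excel vary by function).
TEMPLATE_TOKENS = {"GPT for Docs": 10, "GPT for Word": 60}


def template_overhead(template_tokens: int, executions: int) -> int:
    """Input tokens spent on prompt templates alone, assuming one template per execution."""
    return template_tokens * executions


# A formula with a 19-token template filled down 1,000 rows.
print(template_overhead(19, 1_000))  # 19000
# 50 runs in GPT for Word.
print(template_overhead(TEMPLATE_TOKENS["GPT for Word"], 50))  # 3000
```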